In the previous article of this threefold series on fail-safe design, we have seen how an embedded transactional file system like TSFS can be used to build fail-safe applications by protecting individual files against data and metadata corruption. In this third article, we now show that TSFS transactions go beyond preserving file-level integrity, and can also be used to enforce coherence across multiple files and directories. To support the discussion, we present a real-life application example and demonstrate how a single additional call to tsfs_commit() is all that is needed to make the code immune to unexpected failures.

Consider sensor data to be read into a file at regular intervals. New readings are appended at the end of the file — which we will call the log file. When the log file reaches a given maximum size, its content is compressed and stored in an archive file. A circular index of the archive files is maintained in a separate file. When the index is full, the oldest archive is deleted and removed from the index (hence circular index). In our simple implementation, the index sole purpose is to provide the needed archive ordering information (namely, which is the oldest). However, it could be used to store other useful archive metadata, such as start/end timestamps or minimum/maximum recorded values.

Listing 1 shows a possible implementation of the top-level sensor recording function. The provided rd_cb() callback presumably reads from some kind of sensor and returns a 64-bit value to be recorded. The log_append() function appends the given value to the internal log file and reports whether the log is full. If the log is full, a new archive is created using archive_gen() and the log is reset using log_reset(). When the maximum number of archives is reached, the oldest archive is removed using oldest_archive_remove().

int sensor_record(const char *root_dir_path,

read64_cb_t rd_cb, void *p_cb_data)

{

uint64_t val;

bool full;

while (1) {

// Read one value from the source.

rd_cb(p_cb_data, &val);

// Append the read value to the log.

log_append(root_dir_path, val, &full);

// If the log is full, archive it.

if (full) {

// Create the new archive.

archive_gen(root_dir_path, &full);

// If the archive index is full, remove the oldest archive.

if (full) {

archive_remove_oldest(root_dir_path);

}

// Reset the log file.

log_reset(root_dir_path);

}

}

}

Unexpected Failures Without Transactions

Now, the usual question: what happens in the event of an unexpected failure? Well, as we move on from toy examples to (closer to) real-life examples, the number of possible outcomes explodes, such that we cannot possibly spell out each possible failure scenario. So let’s change our approach a bit and consider instead the reverse question: what makes it so much easier to design, write and analyze code, given an hypothetical failure-free execution?

Designing, writing and understanding storage-related code is much easier in an imaginary failure-free world because we can rely on loop invariants, i.e. statements that are invariably true at the beginning of each loop iteration. Looking at Listing 1 and considering the state of the recording module at the beginning of the loop iteration, the following invariants can be identified:

- The log is not full.

- The archive index is not full.

- Readings are either in the log file or an archive file, but not both.

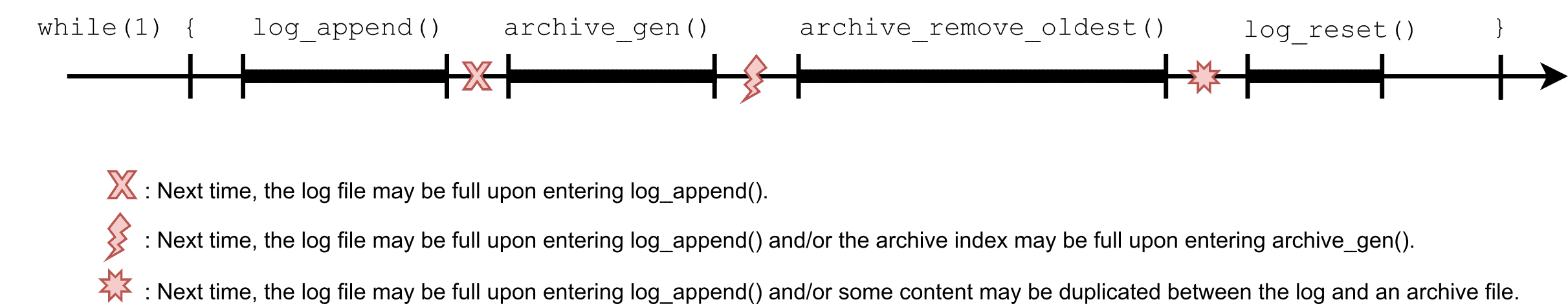

Now, back to the real world (where failures do occur!), a quick survey of the possible failure timings indicates that none of the above-mentioned invariants holds true anymore (see Figure 1). And that, even if the metadata is not itself vulnerable to corruption (say because a journaled file system is used). This is because, unlike the examples of previous articles, this example is not just about file-level integrity but rather about coherence across multiple files and directories.

As you can imagine, the fewer assumptions we can make about the on-disk state — and in particular about inter-file consistency —, the more different situations must be accounted for in the application code. And as these situations arise from rare failures, they tend to be difficult to reproduce and test.

To further illustrate the complexity of the situation at hand, let’s dig a little bit deeper. Take the archive_gen() implementation shown in Listing 2 for instance. Note that, for clarity, error handling as been purposely omitted, as well as unneeded file compression code details. With this implementation in mind, consider once more the on-disk state at the beginning of a loop iteration. In a failure-free context, we can expect the following additional invariants to hold true:

- Each archive file has a corresponding entry in the archive index.

- An archive file is either complete or does not exist.

Again, in the event of unexpected failures, none of these assumptions remains valid, and the application code must be consequently adapted. As we include more and more fail-safety considerations, the application code complexity is quickly growing. But there is worst.

Some failures cannot possibly be recovered at the application-level. This is the case of the newest_ix on-disk update if it happens to cross a sector boundary. Even on a media supporting atomic sector updates, if a failure occurs between the two needed sector updates, the value can be corrupted in ways that the application does not expect or cannot even detect.

static uint64_t archive_gen(const char *root_dir_path, bool *p_full)

{

tsfs_file_hndl_t index_fh, arch_fh, log_fh;

uint64_t id;

char t_path[MAX_LOG_FILE_PATH_LEN];

tsfs_file_size_t sz;

uint32_t oldest_ix, newest_ix;

bool eof;

// Open the archive index file.

sprintf(t_path, "%s/index.dat", root_dir_path);

tsfs_file_open(t_path, &index_fh);

// Fetch the oldest and newest entry indexes.

tsfs_file_read(index_fh, &oldest_ix, sizeof(oldest_ix), &sz);

tsfs_file_read(index_fh, &newest_ix, sizeof(newest_ix), &sz);

// Allocate a new id for the archive to be created.

id = archive_id_alloc();

// Create and open the archive file.

sprintf(t_path, "%s/log_%llu.lz", root_dir_path, id);

tsfs_file_create(t_path);

tsfs_file_open(t_path, &arch_fh);

// Open the log file to be archived.

sprintf(t_path, "%s/log.dat", root_dir_path);

tsfs_file_open(t_path, &log_fh);

// Compress and write to the archive file.

eof = false;

while (!eof) { /* Read, compress, write, one chunk at a time. */ }

// Close archive and log files.

tsfs_file_close(log_fh);

tsfs_file_close(arch_fh);

// Add the newly created archive path to the circular archive index.

newest_ix = (newest_ix + 1) % MAX_LOG_FILE_ENTRY_CNT;

tsfs_file_seek(index_fh, sizeof(oldest_ix), TSFS_FILE_SEEK_SET);

tsfs_file_write(index_fh, &newest_ix, sizeof(newest_ix), &sz);

tsfs_file_seek(index_fh, newest_ix * sizeof(id), TSFS_FILE_SEEK_SET);

tsfs_file_write(index_fh, &id, sizeof(id), &sz);

// Close the index file.

tsfs_file_close(index_fh);

// Determine whether the index is full and exit.

*p_full = (newest_ix == oldest_ix);

return (RTNC_SUCCESS);

}

Unexpected Failures With TSFS Transactions

As discussed in the second article of this three-part series, using TSFS transactions, the file system state is guaranteed to be returned to that of the latest commit in the event of an untimely interruption. With that in mind, we can now modify the top-level code presented in Listing 1 to make it fail-safe.

In fact, the only required modification is the addition of the tsfs_commit() call at the beginning of the loop, such as shown in Listing 3. Notice that, for the whole design to be fail-safe, only the top-level must be modified. The helper functions can remain untouched. Also, the commit function need not be called at each iteration as, coherence wise, it is perfectly acceptable to loose a certain amount of readings in the event of a failure.

int sensor_record(const char *root_dir_path,

read64_cb_t rd_cb, void *p_cb_data)

{

uint64_t val;

bool full;

unsigned long iter_cnt = 0u;

while (1) {

if (iter_cnt % COMMIT_INTERVAL == COMMIT_INTERVAL - 1u) {

rtn = tsfs_commit(root_dir_path);

if (rtn != RTNC_SUCCESS) break;

}

// Read one value from the source.

rd_cb(p_cb_data, &val);

// Append the read value to the log.

log_append(root_dir_path, val, &full);

// If the log is full, archive it.

if (full) {

// Create the new archive.

archive_gen(root_dir_path, &full);

// If the archive index is full, remove the oldest archive.

if (full) {

archive_remove_oldest(root_dir_path);

}

// Reset the log file.

log_reset(root_dir_path);

}

iter_cnt++;

}

}

Now, consider the implications of this added commit function call in terms of the loop invariants discussed earlier:

- The log is not full.

- The archive index is not full.

- Readings are either in the log file or an archive file, but not both.

- Each archive file has a corresponding entry in the archive index.

- An archive file is either complete or does not exist.

Regardless of the possible interruptions, these invariants always hold true. When the code is first executed these invariants are trivially true — assuming that the setup code has created the appropriate empty log and archive files. Then, either the execution makes it to the next iteration, in which case invariants are still true (by definition), or the execution fails and the on-disk state is returned that of the latest commit. And since the call to tsfs_commit() is located at the beginning of the loop, the invariants remain true in this case as well.

From a practical standpoint, this is extremely powerful because, from the moment that transaction boundaries have been defined, the application designer can think and code as if the application was running in a failure-free world, ignoring all possible untimely interruptions and their intricate consequences on the overall application behaviour.

Conclusion

In this third article on fail-safe design, we have seen how even the simplest applications can translate into unbearable complexity when taking into account possible interruptions. We have seen that the mere enumeration of the possible failures and their consequences — leaving alone designing the needed recovery paths — is a tedious task.

We have then shown how TSFS write transactions can be used to enforce a well-defined behaviour, by preserving coherence across multiple files and directories. Through a concrete code example, we have shown that a single tsfs_commit() function call is all that is needed to build a failure-proof implementation, providing the application designer with a failure-free execution abstraction.

Click here to read part 1 and part 2 of this article series.

Questions or comments? Do not hesitate to contact us at blog@jblopen.com. Your questions, comments and, suggestions are appreciated.