In this article, we discuss four popular NOR flash file systems commonly used in bare-metal and RTOS-based embedded systems: LittleFS, Yaffs, FileX and TSFS. We briefly describe each file system and compare their performance, RAM consumption and other specific advantages and limitations. There are, of course, other file systems and storage solutions out there, and we could not possibly evaluate all of them. We hope, however, that this article can serve as a blueprint for evaluating other solutions and shed some light on the crucial reliability and performance aspects to look out for.

Overview

LittleFS is an open source (BSD-3-Clause license) file system primarily designed for NOR flash. It prioritizes low RAM usage over performance and is best suited for small platforms and very light workloads. Random reads are very fast, but write performance is generally poor. Random writes, in particular, are barely practical given the extremely high write amplification and the correspondingly low throughput.

Yaffs is a dual-licensed (GPL and commercial) file system primarily focused on NAND flash devices, although it is also commonly used on NOR flash, where it performs very well. It can run on a variety of host platforms, including Linux-based systems as well as bare-metal and RTOS-based systems. Yaffs keeps the entire file system structure in RAM, which yields high performance at the expense of a larger RAM footprint.

FileX is an open source (MIT license), fail-safe implementation of the FAT file system. FileX does not support raw flash on its own; raw flash support is provided by a separate flash translation layer (FTL) called LevelX. The FileX/LevelX combination provides decent performance on NOR flash, although some workloads can be problematic on memory-constrained platforms. Another limitation is that LevelX relies on multi-pass programming: it resets bits inside a page that has already been programmed, without erasing the page first. This does not work on all devices, and on some it may require the built-in ECC to be turned off.
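To see why multi-pass programming clashes with built-in ECC, recall that programming NOR flash can only change bits from 1 to 0, so programming the same page twice stores the bitwise AND of the two patterns. The minimal Python sketch below models this. The page layout (3 data bytes, a status byte, an ECC byte) and the 1-byte XOR checksum `toy_ecc` are hypothetical stand-ins chosen for illustration; they are not LevelX's actual format or a real ECC code.

```python
ERASED = 0xFF

def nor_program(stored: bytes, pattern: bytes) -> bytes:
    """NOR programming can only clear bits (1 -> 0): the result is a bitwise AND."""
    return bytes(s & p for s, p in zip(stored, pattern))

def toy_ecc(data: bytes) -> int:
    """Hypothetical 1-byte XOR checksum standing in for a real per-page ECC."""
    ecc = 0
    for b in data:
        ecc ^= b
    return ecc

data = b"\x12\x34\x56"
page = bytes([ERASED] * 5)  # hypothetical layout: 3 data bytes, 1 status byte, 1 ECC byte

# First pass: data, status left at 0xFF ("valid"), ECC over data + status.
page = nor_program(page, data + b"\xff" + bytes([toy_ecc(data + b"\xff")]))

# Second pass, without erasing: clear the status byte to 0x00 ("obsolete").
# Bytes programmed with 0xFF stay unchanged; the ECC byte receives its new value.
page = nor_program(page, b"\xff\xff\xff\x00" + bytes([toy_ecc(data + b"\x00")]))

status_ok = page[3] == 0x00            # clearing bits works as intended
ecc_ok = page[4] == toy_ecc(page[:4])  # but the stored ECC no longer matches the data
```

Clearing the status byte succeeds because it only turns ones into zeros, but the ECC value for the new content needs some bits set back to 1, which is impossible without an erase. Real ECC schemes differ in detail but generally run into the same constraint.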

TSFS is a commercially licensed transactional file system for bare-metal and RTOS-based systems with native support for raw flash (NOR and NAND). It delivers the highest overall performance of the four, including for small accesses down to 256 bytes, while using only 8KiB of RAM. TSFS also supports managed flash devices such as SD cards and eMMC.

Comparisons of the File Systems

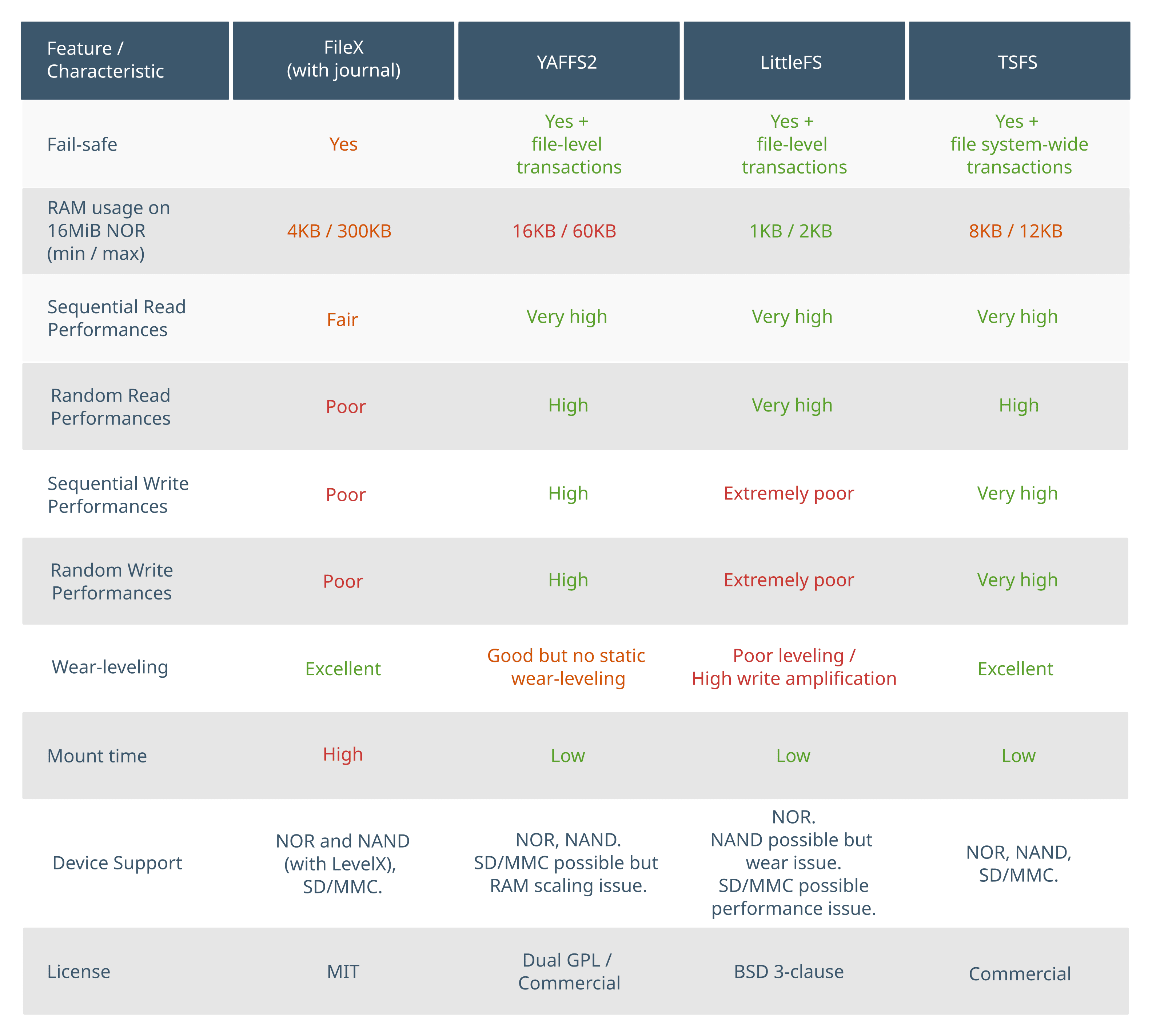

Table 1 summarizes how LittleFS, Yaffs, FileX and TSFS compare in terms of important file system characteristics. The rest of this article details how we came to these conclusions.

How We Measure Performance

For the purposes of this article, performance (including read/write throughput, wear-levelling and mount time) is measured using a NOR flash simulation. The same simulation is used for all file systems and takes the following into account:

- Bus speed and configuration: 80MHz QSPI bus, for a 40MB/s throughput.

- Load latency (i.e. QSPI command, address and dummy cycles): 8 cycles per read access.

- Program and erase time:
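The first two parameters are enough to estimate the raw throughput seen by a single read access. The Python sketch below assumes quad I/O on all phases of the data transfer (4 bits per clock, i.e. 2 cycles per byte) and applies the 8-cycle load latency once per access; like the simulation, it ignores CPU and RAM overhead.

```python
QSPI_CLOCK_HZ = 80_000_000  # 80MHz bus clock
CYCLES_PER_BYTE = 2         # quad I/O: 4 bits per clock, so 2 cycles per byte
LATENCY_CYCLES = 8          # command, address and dummy cycles per read access

def read_throughput(size_bytes: int) -> float:
    """Effective bus throughput in bytes/s for one read access of `size_bytes`."""
    cycles = LATENCY_CYCLES + CYCLES_PER_BYTE * size_bytes
    return size_bytes * QSPI_CLOCK_HZ / cycles

for size in (4, 16, 256, 4096):
    print(f"{size:5d} B: {read_throughput(size) / 1e6:5.1f} MB/s")
```

Under this model, a 4-byte access spends half of its bus time on latency (20MB/s), while a 256-byte access already comes within about 2% of the 40MB/s peak.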

The simulation does not include other system-level limiting factors, such as CPU or RAM access overhead. These have little or no impact on write performance, but their effect can be more significant for small read accesses, something to keep in mind when interpreting the read benchmarks. Despite these limitations, we use simulation for the comparison because it is much faster and lets us run many more benchmarks with varying parameters, which would otherwise take days or weeks to complete.

Fail-Safety

LittleFS, Yaffs, FileX and TSFS are all fail-safe, in the sense that none of them can be corrupted by a power failure or other untimely interruption. This is not to say, however, that they provide the same guarantees. To illustrate some of the differences, consider a hypothetical application where log files are filled one entry at a time and then archived upon reaching a maximum size. To make things interesting, suppose that a log entry is too large to fit in RAM. Instead, each entry is stored through successive, smaller write accesses as data comes in from, say, sensors or a communication link. When a full log is archived, the original log is deleted, and the oldest archive is also deleted to keep the number of archives under a desired maximum. Table 2 shows the integrity and consistency guarantees provided by each file system in this scenario.

FileX provides fail-safety through journalling. Each update is recorded in a dedicated intent log (or journal) before the file system is actually modified. Upon recovery, FileX identifies interrupted operations from the journal and either completes or rolls back partial modifications, ensuring that the file system always returns to a consistent state. Unlike other journalled FAT implementations, FileX provides atomic file-level operations.
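The journalling sequence can be sketched with a toy model in which `data` and `journal` stand for persistent storage. This is a minimal, redo-only illustration under stated assumptions: the journal record is assumed to be written atomically (a real journal would protect it with a checksum or sequence number), only the roll-forward path is shown although FileX can also roll back, and the class and method names are hypothetical rather than FileX API.

```python
class JournaledStore:
    """Toy model of journalling: `data` and `journal` stand for persistent storage."""

    def __init__(self):
        self.data = {}       # file system state (e.g. FAT and directory entries)
        self.journal = None  # pending intent record, or None when nothing is in flight

    def update(self, changes: dict, crash_after=None) -> None:
        """Apply `changes`; `crash_after` simulates power loss after N modifications."""
        self.journal = dict(changes)            # 1. log the intended operation first
        for applied, (key, value) in enumerate(changes.items()):
            if crash_after is not None and applied == crash_after:
                return                          # power lost: the journal keeps the intent
            self.data[key] = value              # 2. modify the file system in place
        self.journal = None                     # 3. operation complete: reset the journal

    def recover(self) -> None:
        """Run at mount time: roll forward any operation the journal says was interrupted."""
        if self.journal is not None:
            self.data.update(self.journal)
            self.journal = None
```

A power loss after the first modification leaves the data half-updated, but the journal still holds the complete intent, so `recover()` brings the store to the fully updated state.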

LittleFS, Yaffs and TSFS all leverage copy-on-write (required for native flash support) to extend atomicity to arbitrarily long, application-delimited sequences of file updates (as opposed to a single update for FileX). In the case of LittleFS and Yaffs, file modifications do not take effect until the updated file is either closed or synchronized (at the application's request). When that happens, the file system atomically switches to the new, modified state, leaving no room for partial modifications. TSFS pushes this all-or-nothing behaviour further with transactions: atomic update sequences containing any number of modifications to any number of files or directories (file updates, creation, deletion, renaming, truncation, etc.).
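The idea behind copy-on-write atomicity can be illustrated with a toy store in which all changes are written out of place and a single root reference selects the live state. In this minimal Python sketch, the class and method names (`CowStore`, `begin`, `commit`) are hypothetical and do not correspond to the LittleFS, Yaffs or TSFS APIs; for simplicity, each transaction copies the whole state, whereas a real copy-on-write file system only copies the modified blocks and the metadata leading up to the root.

```python
class CowStore:
    """Toy copy-on-write store: committed snapshots are never modified in place."""

    def __init__(self):
        self._snapshots = [{}]  # stands for data written out of place on flash
        self._root = 0          # the single reference that selects the live state

    def begin(self) -> dict:
        """Start a transaction on a private copy of the committed state."""
        return dict(self._snapshots[self._root])

    def commit(self, tx: dict) -> None:
        """Write the new state out of place, then switch the root in one step."""
        self._snapshots.append(tx)
        self._root = len(self._snapshots) - 1

    @property
    def state(self) -> dict:
        return self._snapshots[self._root]


store = CowStore()
tx = store.begin()
tx["log.txt"] = "entry 1"
store.commit(tx)

# Archive the log in one transaction: the rename and any deletions become visible together.
tx = store.begin()
tx["archive-1.txt"] = tx.pop("log.txt")
state_before_commit = dict(store.state)  # what a power loss at this point would leave
store.commit(tx)
```

Until `commit` switches the root, the committed state is untouched, so an interruption at any earlier point simply leaves the previous version in place.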

RAM Usage

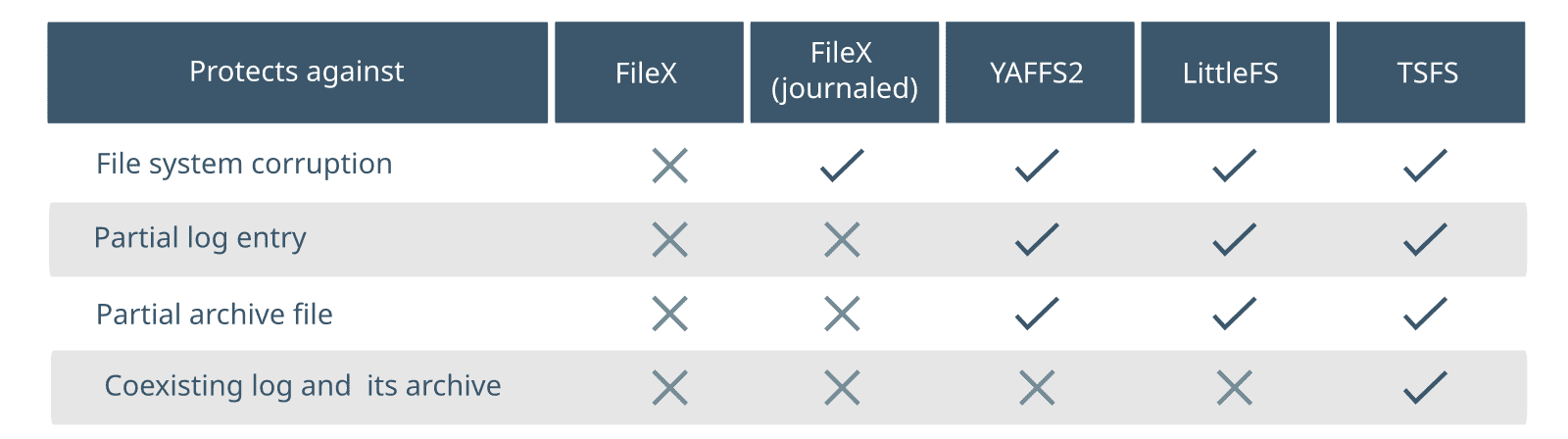

Figure 1 shows the RAM requirements of each file system, ranging from the absolute minimal configuration up to a maximum-performance configuration beyond which only marginal gains can be realized.

Focusing first on the minimum RAM requirement, LittleFS stands out as the least demanding (~1KB), followed by FileX (~4KB) and TSFS (~8KB). Yaffs has the highest minimum RAM requirement of the four (~16KB on a 16MiB NOR).

LittleFS and TSFS both provide a near-constant level of performance, regardless of the configuration. In the case of LittleFS, read performance is excellent even without any caching, while write performance is terrible for fundamental design reasons that more RAM cannot overcome. TSFS, on the other hand, offers very high performance across the board, with only a slight improvement in random read performance as more RAM is added.

Yaffs allows a trade-off between performance and RAM usage through the chunk (sector) size configuration. A smaller chunk size requires more RAM but improves performance for small accesses. Conversely, a larger chunk size decreases RAM usage but increases write amplification for smaller accesses. For reference, a 4KiB sector size configuration for a 16MiB NOR requires around 16KB of RAM, while a 1KiB sector size configuration requires around 50KB.
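A quick back-of-the-envelope check of these two figures: dividing the quoted RAM by the number of chunks on the device gives a few bytes per chunk in both configurations, which is consistent with RAM usage scaling roughly with the chunk count. This is only arithmetic on the approximate numbers above (taking KB as 1000 bytes), not a model of Yaffs' internal data structures.

```python
KIB = 1024
NOR_SIZE = 16 * 1024 * KIB  # 16MiB device

# Approximate RAM figures quoted above for a 16MiB NOR (KB taken as 1000 bytes).
QUOTED_RAM = {4 * KIB: 16_000, 1 * KIB: 50_000}

def chunk_count(chunk_size: int, device_size: int = NOR_SIZE) -> int:
    """Number of chunks the file system has to track on the device."""
    return device_size // chunk_size

per_chunk = {size: ram / chunk_count(size) for size, ram in QUOTED_RAM.items()}
for size, cost in per_chunk.items():
    print(f"{size // KIB}KiB chunks: {chunk_count(size)} chunks, ~{cost:.1f} bytes of RAM each")
```

Going from 4KiB to 1KiB chunks quadruples the number of chunks (4096 to 16384), which accounts for most of the roughly threefold increase in RAM.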

Calculating FileX RAM usage is trickier. Performance, especially for random accesses, largely depends on the size of the internal FAT entry cache. On NOR flash (and other small devices), large clusters can be used to minimize the size of the FAT and the amount of cache needed to absorb most of the costly cluster chain lookups. When journalling is switched on, however, a large cluster size comes with increased write amplification (because clusters are copied upon modification), so there is a trade-off. Also, the FTL generates many extra read accesses as part of garbage collection, which can only be absorbed with much larger amounts of cache RAM. For the best possible performance, up to a few hundred kilobytes of RAM might be needed, depending on the access size, the access pattern and the size of the working set.
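The cluster size trade-off can be quantified with plain FAT arithmetic. The sketch below assumes a 16MiB volume and 256-byte updates, uses the cluster-count thresholds of the FAT specification to pick the FAT variant, ignores reserved sectors and the root directory, and assumes (as described above for fault-tolerant mode) that every update copies the whole cluster. It does not account for LevelX or for FileX's own caching.

```python
import math

KIB = 1024
MIB = 1024 * KIB

def fat_bits(clusters: int) -> int:
    """FAT variant chosen from the cluster count (thresholds from the FAT specification)."""
    if clusters < 4085:
        return 12
    if clusters < 65525:
        return 16
    return 32

def fat_size_bytes(volume_bytes: int, cluster_bytes: int) -> int:
    """Approximate size of one FAT copy; ignores reserved sectors and the root directory."""
    clusters = volume_bytes // cluster_bytes
    return math.ceil((clusters + 2) * fat_bits(clusters) / 8)  # +2 reserved FAT entries

def cluster_copy_amplification(cluster_bytes: int, update_bytes: int) -> float:
    """Bytes programmed per byte written when each update copies the whole cluster."""
    return cluster_bytes / update_bytes

for cluster in (512, 4 * KIB, 16 * KIB):
    print(cluster, fat_size_bytes(16 * MIB, cluster), cluster_copy_amplification(cluster, 256))
```

Moving from 512-byte to 16KiB clusters shrinks the FAT from about 64KiB to about 1.5KiB, making it much easier to cache, but multiplies the cluster copy cost of a 256-byte update by 32.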

Write Performance

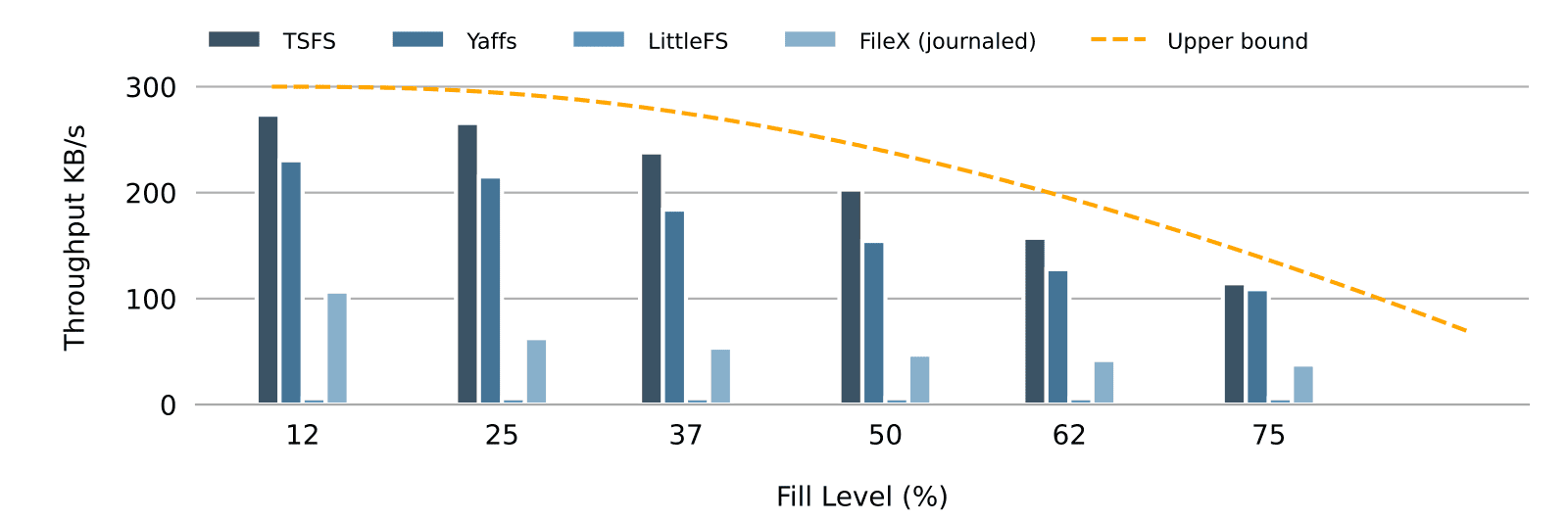

Compared to NAND and NAND-based storage devices, NOR flash offers limited raw write performance: at most 300KB/s of sustained program/erase throughput, with a maximum access time of hundreds of milliseconds dictated by the block erase operation. The worst-case access time can be improved by erasing smaller sub-sectors when supported, but only at the cost of a lower average throughput. Either way, there is not much room for waste, which makes it all the more crucial to watch write performance when comparing file systems. Of course, write performance depends on the workload (access pattern and size). Perhaps less obviously, it also depends on the amount of free storage space available.
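The erase-size trade-off follows from a simple model in which each erase unit is erased once and then fully programmed, page by page. Because erase time does not shrink in proportion to the erase size, smaller erase units cut the worst-case stall but lower the sustained throughput. The timings in this Python sketch are illustrative assumptions chosen only to make the shape of the trade-off visible; they are not taken from a datasheet or from our simulation, and real devices vary widely.

```python
# Illustrative timings only (hypothetical, not from a datasheet or from our simulation).
PAGE_BYTES = 256
PAGE_PROGRAM_S = 0.0005        # 0.5 ms per 256-byte page
ERASE_S = {64 * 1024: 0.300,   # 64KiB block erase
           4 * 1024: 0.045}    # 4KiB sub-sector erase

def sustained_write_throughput(erase_unit: int) -> float:
    """Bytes/s when every erase unit is erased once and then fully programmed."""
    pages = erase_unit // PAGE_BYTES
    return erase_unit / (ERASE_S[erase_unit] + pages * PAGE_PROGRAM_S)

for unit, erase_time in ERASE_S.items():
    print(f"{unit // 1024}KiB erase: {sustained_write_throughput(unit) / 1000:.0f} KB/s, "
          f"worst-case stall {erase_time * 1000:.0f} ms")
```

With these assumed numbers, switching to 4KiB sub-sectors cuts the worst-case stall from 300ms to 45ms but roughly halves the sustained throughput.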

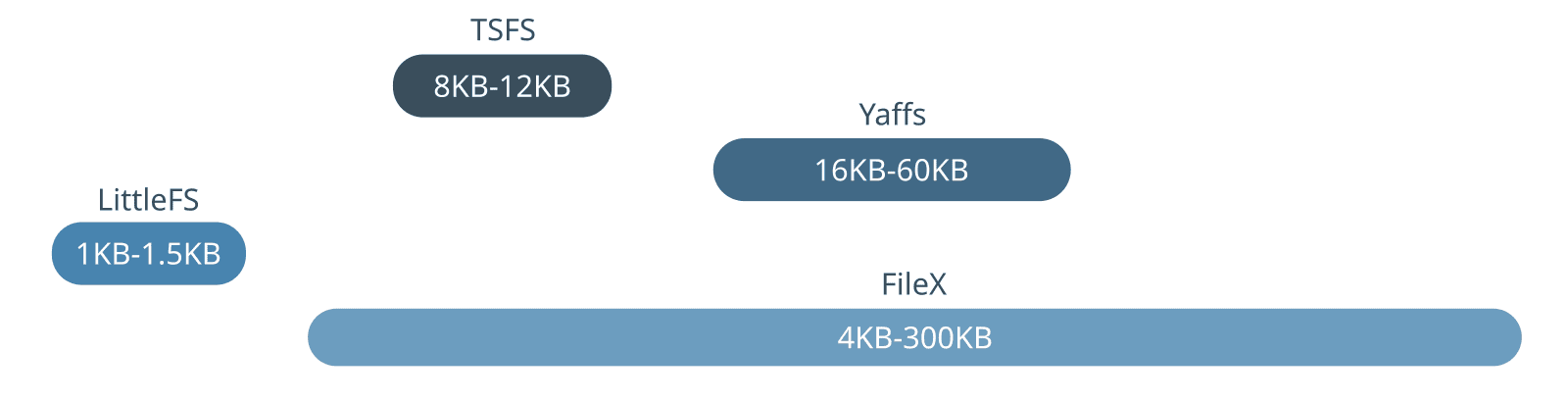

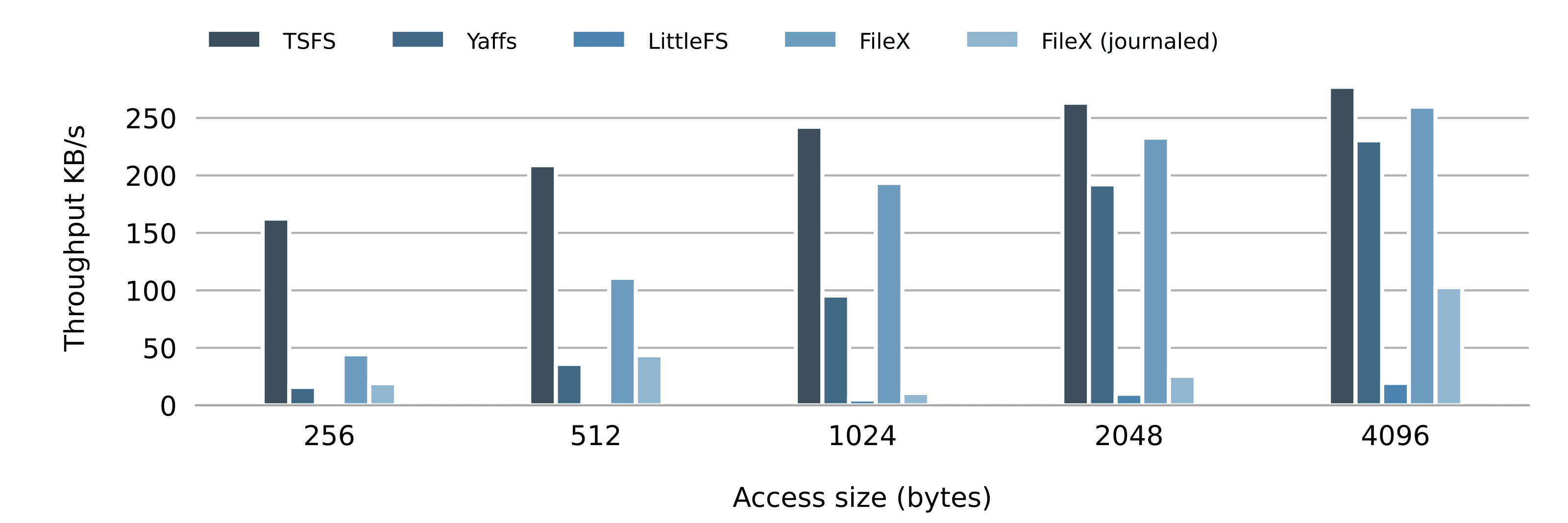

Figure 2 shows sequential write throughputs for each file system and for various access sizes. The test file is 8MiB and the simulated NOR is 16MiB. The access position is incremented until the end of the file is reached, at which point it is reset to the start. In the case of LittleFS, the file is deleted upon reaching its end because, otherwise, write speed slows down to a crawl (more on that later). Each access is completed, all the way down to the storage device, before the next access begins. For Yaffs and LittleFS, this means opening the test file before each access and closing it afterwards. For TSFS, this means calling the dedicated commit function after each access. For this benchmark, FileX is given 32KiB of RAM for internal caches, about the same amount of RAM as Yaffs uses in a middle-of-the-road 1KiB sector configuration. LittleFS uses 1.2KB of RAM and TSFS around 8KB.

Figure 3 shows random write throughputs for each file system and for various access sizes. For this benchmark, the position is uniformly distributed across the entire 8MiB file. Otherwise, the same general conditions and configurations apply as for the sequential write benchmark.
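The two access patterns can be expressed as simple offset generators. This Python sketch is an illustration of the benchmark procedure described above, not our actual benchmark code: the function names are hypothetical, aligning random offsets to the access size is our assumption (the text only states a uniform distribution), and the LittleFS variant that deletes the file at wrap-around is not shown.

```python
import random

def sequential_offsets(file_size: int, access_size: int, count: int):
    """Sequential benchmark: advance by one access, wrap to 0 at the end of the file."""
    pos = 0
    for _ in range(count):
        if pos + access_size > file_size:
            pos = 0
        yield pos
        pos += access_size

def random_offsets(file_size: int, access_size: int, count: int, seed: int = 0):
    """Random benchmark: offsets uniformly distributed over the whole file."""
    rng = random.Random(seed)
    slots = file_size // access_size
    for _ in range(count):
        yield rng.randrange(slots) * access_size  # assumption: access-size-aligned offsets
```

Using a fixed seed keeps the random sequence identical across file systems, so every implementation sees exactly the same workload.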

Figure 4 shows the random write throughput of each file system for various working set sizes (expressed as a percentage of the media size). The orange line represents an upper bound; how we determine this upper bound is explained in detail in a separate article.

In all scenarios, LittleFS stands out with extremely poor write performance. There are two main reasons for this:

- When appending data, the content of the last block of the file must be copied to a new block. Each update therefore triggers a block erase, which results in a very low write throughput and considerably shortens the device lifetime (see a description of this issue here).

- When writing to an arbitrary location within a file, any content located after the update must be rewritten to maintain the backward linked list that chains the file's blocks together, as described in the LittleFS documentation.
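To get a feel for the magnitudes, the two issues above can be turned into a first-order write amplification model. This Python sketch is a simplified model of the behaviour described in the list, not a reimplementation of LittleFS: it assumes 4KiB blocks and counts only the data that must be rewritten, ignoring metadata, skip-list pointers and the erases themselves.

```python
BLOCK = 4096  # assumption: 4KiB erase blocks

def append_cost(file_size: int, size: int, block: int = BLOCK) -> int:
    """Bytes programmed to append `size` bytes: the partial last block is copied first."""
    return file_size % block + size

def random_write_cost(file_size: int, offset: int, size: int, block: int = BLOCK) -> int:
    """Bytes programmed to overwrite `size` bytes at `offset`: everything from the
    block containing `offset` to the end of the file is rewritten."""
    start = (offset // block) * block
    end = max(file_size, offset + size)
    return end - start

def mean_random_amplification(file_size: int, size: int, block: int = BLOCK) -> float:
    """Average bytes programmed per byte written, over all size-aligned offsets."""
    offsets = range(0, file_size - size + 1, size)
    total = sum(random_write_cost(file_size, o, size, block) for o in offsets)
    return total / (len(offsets) * size)

print(mean_random_amplification(8 * 1024 * 1024, 256))
```

For an 8MiB file and 256-byte random writes, the model gives an average amplification of about 16,000: each small update rewrites roughly half of the file, which is why random writes are barely practical.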

It seems the append issue might be resolved in the future, but there is little hope for the second (random access) issue, which is the consequence of a fundamental design choice.

In the case of FileX, performance is limited by a few different factors:

- Journalling comes with additional write accesses to the journal: one before the actual file update, to log the upcoming operation, and one once the update is completed, to reset the journal. These accesses must be strictly ordered, which makes write-back caching useless. As a matter of fact, enabling the fault-tolerant mode in FileX causes a significant drop in write throughput.

- With fault-tolerant mode enabled, FileX uses out-of-place cluster updates. Even when a single sector is updated, the whole cluster must be copied to a new location, which increases write amplification and reduces write performance.

- Random access performance largely depends on caching, which might not be available on RAM-constrained platforms. FileX (like other FAT implementations) has \(\mathcal{O}(n)\) lookup time complexity (\(n\) being the number of clusters), and LevelX has \(\mathcal{O}(k)\) lookup time complexity (\(k\) being the number of sectors). Combined, this gives \(\mathcal{O}(n \cdot k)\), i.e. \(\mathcal{O}(n^2)\), since \(k\) is a constant multiple of \(n\) (the number of sectors per cluster).

- For accesses smaller than the minimum sector size (512 bytes), FileX must perform a read-modify-write sequence. In the case of 256-byte accesses, for instance, the throughput is roughly cut in half compared to an optimal blind write (neglecting the extra reading).
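The complexity argument in the third point can be made explicit for a single uncached random access. As a simplified cost model (an assumption for illustration, not a measurement), take a file spanning up to \(n\) clusters, \(s\) sectors per cluster so that \(k = s\,n\), and a worst-case LevelX lookup cost of \(c\,k\) for each FAT entry read, where \(c\) is the cost of examining one mapping entry. Reaching a uniformly chosen cluster from the start of the file means following about \(n/2\) FAT links on average:

\[
C_{\text{random}} \approx \frac{n}{2} \cdot c\,k = \frac{c\,s}{2}\,n^{2} = \mathcal{O}(n^{2})
\]

Doubling the number of clusters therefore roughly quadruples the uncached lookup cost, which is why FAT entry caching matters so much for random access.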

In the case of Yaffs, high write performance is expected, since Yaffs keeps the entire file system structure in RAM. For smaller file updates, however, Yaffs is significantly less efficient. There are two main reasons for this:

- Yaffs must write one extra chunk upon closing or synchronizing a file to mark the end of an atomic file update. For a single-chunk write, the write throughput is thus cut in half.

- Yaffs2 does not support chunks smaller than 1KiB, so smaller updates always result in a read-modify-write sequence, which further decreases the write throughput.

In the case of TSFS, the main limiting factor on write performance is the metadata update overhead. This is why write performance declines as the access size gets smaller, as is the case for all file systems. For TSFS, however, the decline is less pronounced, for the following reasons:

- TSFS supports small sectors, down to 256 bytes, which keeps write amplification very low even for small accesses. By contrast, the minimum sector size for Yaffs is 1KiB, which explains why smaller accesses are much slower.

- The commit overhead is very small (around 32 bytes). By contrast, Yaffs must write a whole additional sector each time a file is closed or synchronized after a write access.
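The overheads listed in this section can be combined into a first-order estimate of write efficiency (payload bytes divided by bytes programmed). This Python sketch counts only the overheads named above (whole chunks or sectors, one extra Yaffs chunk per close/sync, a ~32-byte TSFS commit), assumes aligned writes, and ignores erase time, reads and any other metadata. It is therefore an upper bound on efficiency rather than a prediction of measured throughput, and the function names are hypothetical.

```python
import math

def yaffs_efficiency(write_size: int, chunk: int = 1024) -> float:
    """Whole chunks (read-modify-write below the chunk size) plus one extra
    chunk written on close/sync to mark the end of the atomic update."""
    programmed = (math.ceil(write_size / chunk) + 1) * chunk
    return write_size / programmed

def tsfs_efficiency(write_size: int, sector: int = 256, commit_bytes: int = 32) -> float:
    """Whole 256-byte sectors plus a ~32-byte commit record."""
    programmed = math.ceil(write_size / sector) * sector + commit_bytes
    return write_size / programmed

for size in (256, 1024, 4096):
    print(size, f"{yaffs_efficiency(size):.3f}", f"{tsfs_efficiency(size):.3f}")
```

For a single 1KiB chunk, the Yaffs model gives 50%, matching the "cut in half" figure above; for 256-byte writes it drops to 12.5%, while the TSFS model stays close to 89%.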

Read Performance

One of the performance benefits of NOR flash is low read latency combined with very high throughput, up to hundreds of megabytes per second for the fastest bus configurations. Fast access compounds with another natural advantage of NOR flash: support for arbitrarily small accesses. In the end, the potential for file-level read performance is very high, something that file systems leverage to different degrees.

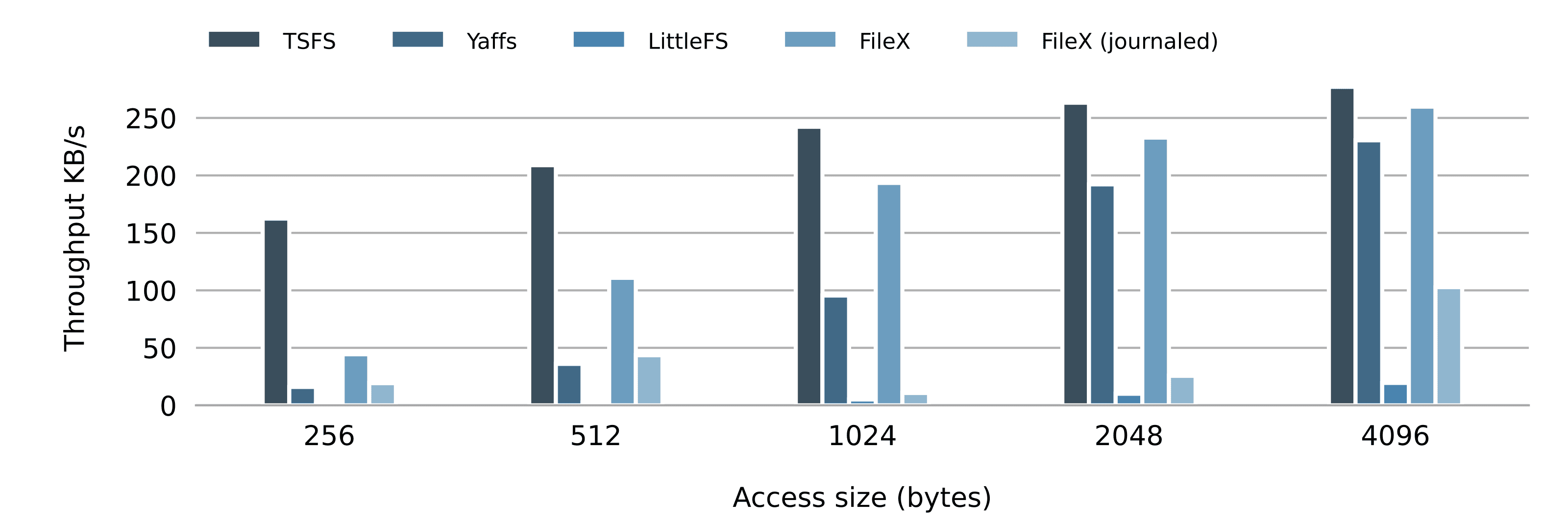

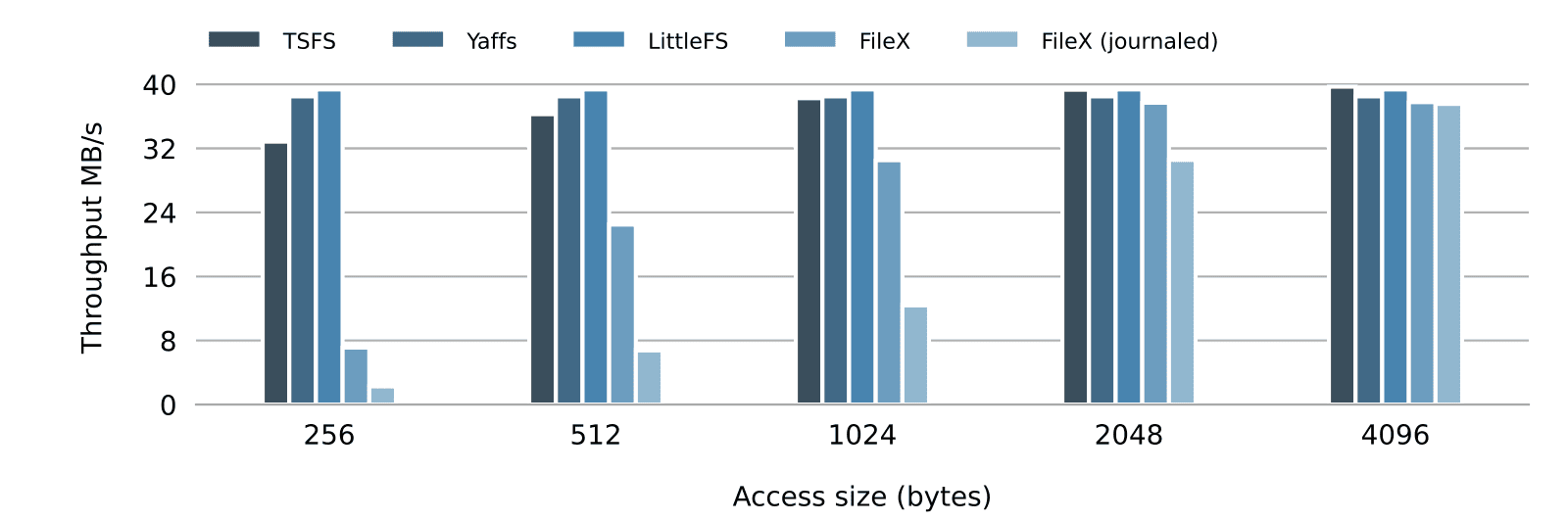

Figure 5 shows sequential read throughputs for each file system and for various access sizes. Before the actual benchmark, the test file is created and sequentially filled until its size reaches 8MiB (half the device size). During the benchmark, the file is sequentially read multiple times for each access size. The file is kept open throughout, so only the access time is measured, not the file lookup time. Otherwise, the file system configurations remain the same as for the write benchmarks.

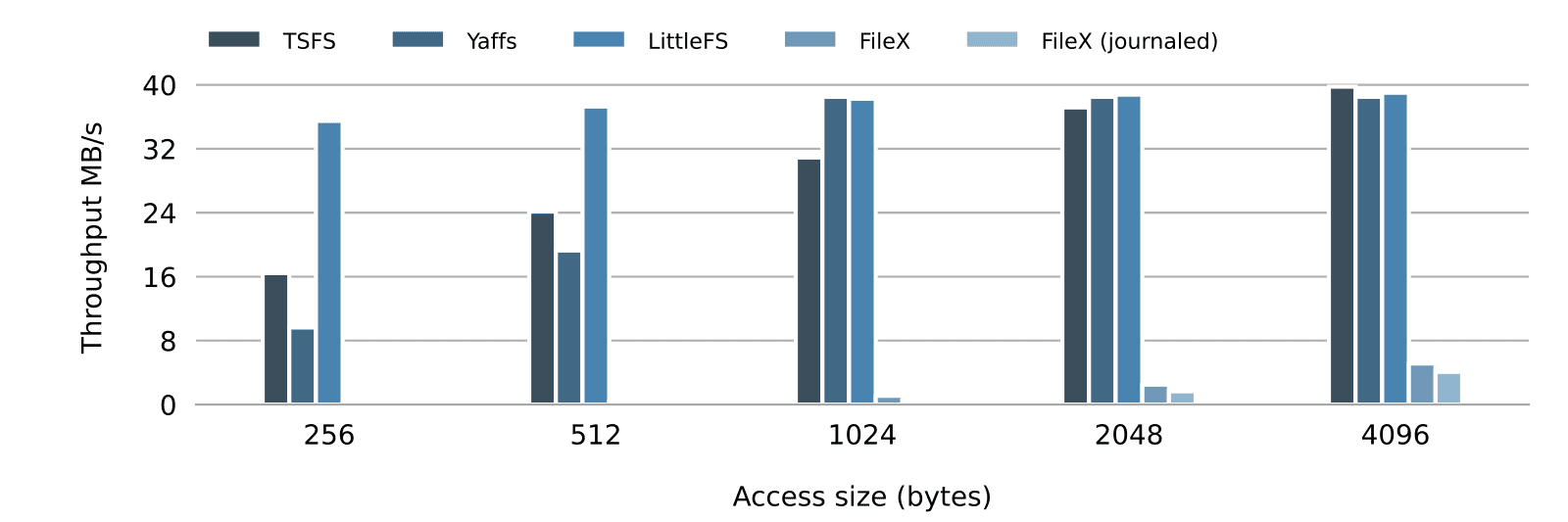

Figure 6 shows uniform random read throughputs for each file system and for various access sizes. The test conditions are the same as for the sequential read benchmark.

In both the sequential and random read benchmarks, FileX stands out with the poorest overall performance. In the sequential case, FileX does significantly better when the journal is disabled. It is somewhat surprising that journalling would affect read performance like that. The explanation is that FileX, in fault-tolerant mode, performs out-of-place cluster updates, which means that even when the file is written sequentially (as in the benchmark setup phase), its clusters are not allocated sequentially. The result is more time spent following cluster chains during subsequent read accesses, and a corresponding drop in average throughput.
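A toy model shows how out-of-place updates break up a file that is written sequentially in small pieces. This Python sketch assumes a hypothetical next-fit allocator that does not immediately reuse freed clusters, and several small writes per cluster during the setup phase; FileX's actual allocation policy may differ, and the function names are illustrative only.

```python
def fill_sequentially(n_clusters: int, writes_per_cluster: int, out_of_place: bool) -> list:
    """Physical cluster chain of a file written sequentially in small pieces."""
    next_free = 0  # hypothetical next-fit allocator: freed clusters are not reused here
    chain = []
    for _ in range(n_clusters):
        chain.append(next_free)          # first write into a new file cluster allocates it
        next_free += 1
        for _ in range(writes_per_cluster - 1):
            if out_of_place:
                chain[-1] = next_free    # each later write copies the cluster elsewhere
                next_free += 1
    return chain

def contiguous_runs(chain: list) -> int:
    """Number of physically contiguous extents in the chain."""
    if not chain:
        return 0
    return 1 + sum(1 for a, b in zip(chain, chain[1:]) if b != a + 1)
```

With in-place updates, four clusters written in four pieces each form a single contiguous run; with out-of-place updates every cluster becomes its own run, so a reader can no longer take advantage of contiguity and must follow the chain cluster by cluster.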

LittleFS, Yaffs and TSFS offer excellent overall read performance. LittleFS is remarkably fast for small random reads, especially given the absence of caching. Such high read throughput is possible because LittleFS rebuilds its indexing structures (skip lists) after each update, something that, unfortunately, also contributes to its poor write performance.
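For reference, the LittleFS design documentation describes its file structure as a backward "CTZ skip-list": block \(n\) stores pointers to blocks \(n - 2^x\) for every \(x\) such that \(2^x\) divides \(n\). The Python sketch below models only block indices (not the on-disk format) and counts the hops needed to reach a target block from the last one, always taking the longest available pointer that does not overshoot.

```python
def ctz(n: int) -> int:
    """Count trailing zero bits of a positive integer."""
    return (n & -n).bit_length() - 1

def skip_list_hops(current: int, target: int) -> list:
    """Blocks visited when walking back from block `current` to block `target`."""
    hops = []
    while current > target:
        x = ctz(current)                     # block `current` points back by 2^0 .. 2^ctz
        while current - (1 << x) < target:   # take the longest jump that does not overshoot
            x -= 1
        current -= 1 << x
        hops.append(current)
    return hops
```

Walking from block 1023 back to block 0 takes 10 hops instead of 1023 with a plain backward linked list, which is what makes small random reads cheap; the price is that these pointers must be rewritten whenever earlier blocks change.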

Conclusion

In this article, we presented in depth the features, advantages and disadvantages of four popular high-reliability, low-footprint embedded file systems. It highlights the old adage that everything is a trade-off, especially when it comes to file systems on resource-constrained devices. However, we hope to have shown that choosing an optimized solution can considerably improve the performance and longevity of embedded flash storage.

As usual, thank you for reading. If you need advice on anything related to embedded storage or embedded systems in general, please feel free to contact us or take a look at our very own file system, TSFS.